Citation

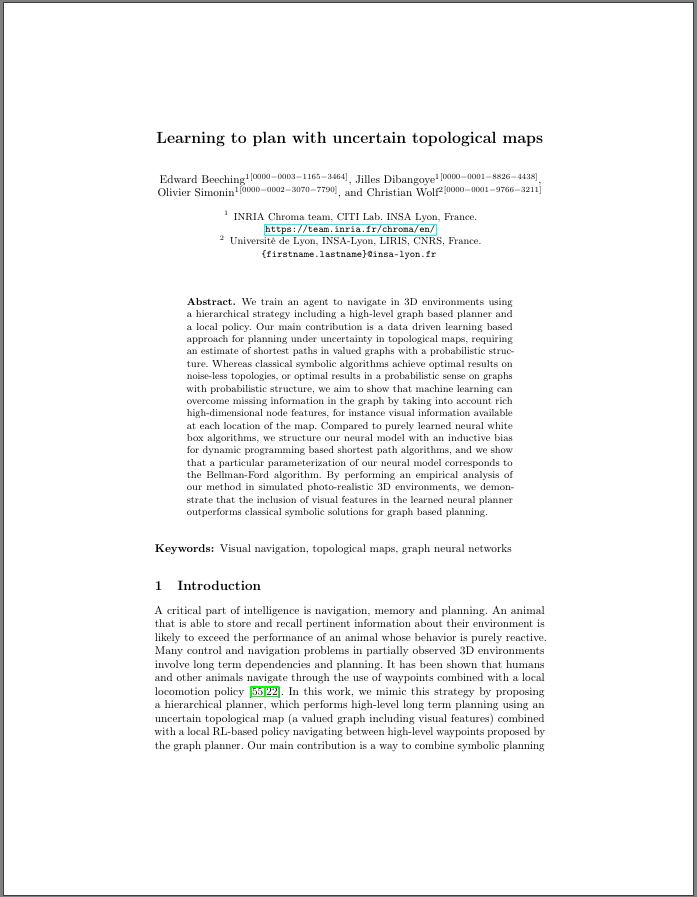

@inproceedings{beeching2020learntoplan,
  title={Learning to plan with uncertain topological maps},
  author={Beeching, Edward and Dibangoye, Jilles and Simonin, Olivier and Wolf, Christian},
  booktitle={European Conference on Computer Vision},
  year={2020}
}


Acknowledgements

Website template from here and here.