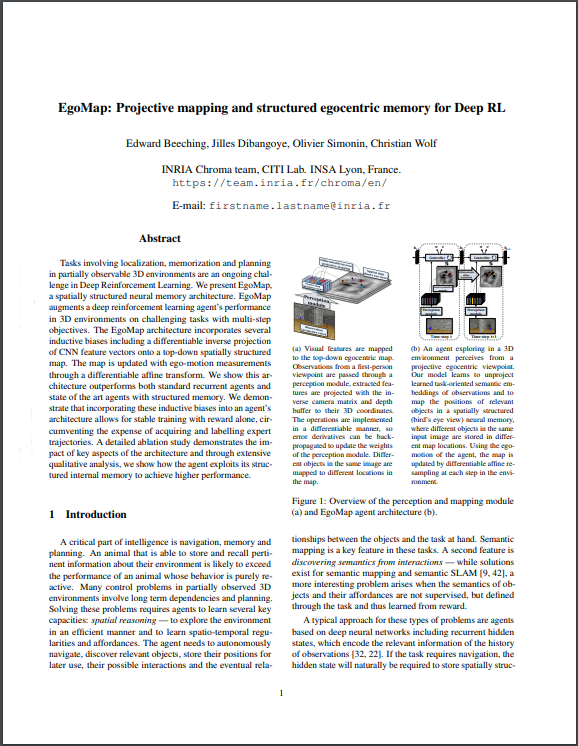

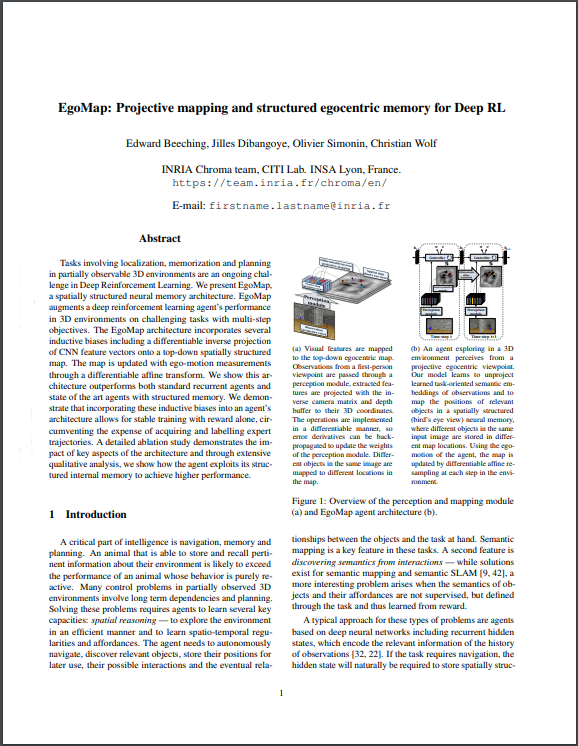

[Paper]


Citation

Beeching, E., Dibangoye, J., Simonin, O., and Wolf, C., 2020. EgoMap: Projective mapping and structured egocentric memory for Deep RL. In Proceedings of the European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases.

[Bibtex]

@inproceedings{beeching2020egomap,
  title={EgoMap: Projective mapping and structured egocentric memory for Deep RL},
  author={Beeching, Edward and Dibangoye, Jilles and Simonin, Olivier and Wolf, Christian},
  booktitle={ECMLPKDD},
  year={2020}
}
